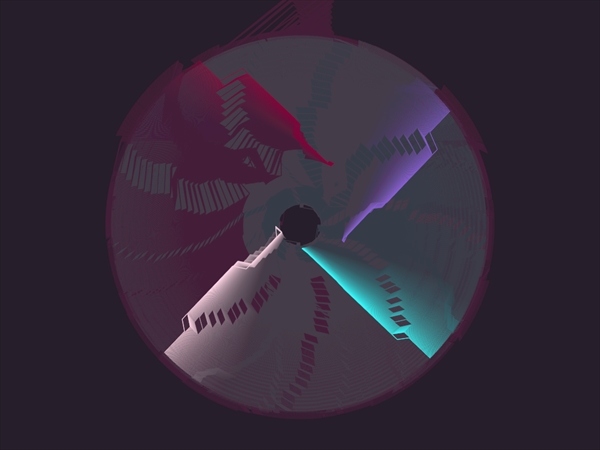

Pictured: A brainwave visualizer created with the Emotiv block

While working at Fashionbuddha in June of 2011, I built an Emotiv wrapper for Cinder. We picked up a couple of headsets as more of a novelty, but I became fascinated with them. I've always been intrigued by the inner workings of the mind, and the EPOC is an affordable and accessible way to get a glimpse of what's going on in our skulls. I built an experiment called "Seccog", which used eye movement and cognitive thought to drive a bouncy, ribbon-emitting ball around the screen. This was eventually refined into a two-player game which you could play against an opponent or the computer (it can be seen here running in demo mode). Unfortunately, there is basically no real demand for development with this device, and the project was abandoned. The block I released at the time was a tad buggy and did not include sample projects, so it never really saw much use.

I'm about to get cheesy. If you just want the block, skip ahead, but read on if you want some insight into why I think this technology is worth exploring...

Much like buying a car suddenly makes you notice everyone else who has the same model, I've been paying a lot more attention to EEGs in the past few months than I would have expected. One thing I've learned a lot about in this time is the reported effectiveness, and expense, of neurofeedback (EEG biofeedback) therapy.

We have a generation of inattentive children on our hands. Whether ADD/ADHD is simply overdiagnosed, or we've truly given our children too many distractions too early in life, there is at least a perceived problem to fix, and most parents resort to medication. For a child's still-forming brain and identity, this can be damaging.

Pictured: The Cognitiv sample project with EmoComposer

Neurofeedback therapy provides a drug-free alternative that works in many cases short of the most extreme.

The concept is that you present a patient with mental exercises which require focus, then reward the behavior. The theta brainwave frequency range is what is amplified as you are drifting off to sleep. The beta range is what's highest when you are alert and focused. For some people, beta waves never or rarely outweigh theta waves. Using an EEG, you can measure when beta waves are higher than theta and, in turn, perform an action which rewards the patient. This taps into the brain's reward pathway -- or addiction center. In other words, get them addicted to focus. The brain's plasticity will make it stick.

Most neurofeedback programs are expensive and, in my opinion, pretty weak. With bleeding-edge video games capturing the attention of many children, it's difficult to create a competing product that will hold their interest. The EPOC has a lot of potential to reduce costs for families who currently opt for medication because they can't afford neurofeedback therapy sessions for long enough to be effective. Creating an easy, open source way to implement the device and sharing it with the creative programming community may, hopefully, inspire some talented artists to make something that the neurofeedback world has never seen. The bar needs to be raised and the cost lowered.

This was my motivation to revisit the block. I've taken some time to revamp the code to perform better and make it easier to use. I've also created two sample applications which demonstrate the key components of neurofeedback therapy -- brainwave analysis and interaction with cognitive thought.

GET EMOTIV FOR CINDER ON GITHUB

The block includes two sample projects for Visual Studio 2010 demonstrating brainwave analysis and moving an on-screen object with cognitive thought. The "Cognitiv" sample is a pared-down version of "Seccog". Just drop the contents of this zip into your "blocks" folder and try out the samples. The block requires the Emotiv SDK and the KissFFT block.
The SDK cannot be distributed with the block, but there are a couple of text files with clear instructions on what goes where.

WHAT'S NEW?

Static Library

A really nice feature of the new block is the option to use either the source code or a static library. The examples use the static library. This hides the guts of the block and speeds up build time.

Explicit Pointer

The old Emotiv block used what's called an "implicit pointer". It was a standard class with a shared pointer that did all the work. The new class simply returns a shared pointer for you to work with directly.

Here's a quick rundown on implementing the block. Below is a complete application which creates an instance of the Emotiv class, listens for events from the headset, and dumps data to the console.

```cpp
#include "cinder/app/AppBasic.h"
#include "Emotiv.h"

class MyApp : public ci::app::AppBasic
{
public:
    // Cinder callback
    void setup();

    // Emotiv callback
    void onData( EmotivEvent event );

private:
    // Emotiv
    EmotivRef mEmotiv;
    int32_t   mCallbackId;
};

// Setup
void MyApp::setup()
{
    // Connect to Emotiv engine
    mEmotiv = Emotiv::create();
    if ( mEmotiv->connect() ) {
        console() << "Connected to Emotiv Engine\n";
    }

    // Add Emotiv callback
    mCallbackId = mEmotiv->addCallback<MyApp>( &MyApp::onData, this );
}

// Handles Emotiv data
void MyApp::onData( EmotivEvent event )
{
    // Trace event data
    console() << "Blink: " << event.getBlink() << "\n";
    console() << "Clench: " << event.getClench() << "\n";
    console() << "Cognitiv Suite action: " << event.getCognitivAction() << "\n";
    console() << "Cognitiv action power: " << event.getCognitivPower() << "\n";
    console() << "Engagement/boredom ratio: " << event.getEngagementBoredom() << "\n";
    console() << "Eyebrows up: " << event.getEyebrow() << "\n";
    console() << "Eyebrows furrowed: " << event.getFurrow() << "\n";
    console() << "Laughing: " << event.getLaugh() << "\n";
    console() << "Long term excitement score: " << event.getLongTermExcitement() << "\n";
    console() << "Looking left: " << event.getLookLeft() << "\n";
    console() << "Looking right: " << event.getLookRight() << "\n";
    console() << "Short term excitement score: " << event.getShortTermExcitement() << "\n";
    console() << "Smiling: " << event.getSmile() << "\n";
    console() << "Smirking left: " << event.getSmirkLeft() << "\n";
    console() << "Smirking right: " << event.getSmirkRight() << "\n";
    console() << "Time elapsed: " << event.getTime() << "\n";
    console() << "Device/user ID: " << event.getUserId() << "\n";
    console() << "Winking left eye: " << event.getWinkLeft() << "\n";
    console() << "Winking right eye: " << event.getWinkRight() << "\n";
    console() << "Wireless signal: " << event.getWirelessSignalStatus() << "\n";
    console() << "Brainwave - alpha: " << event.getAlpha() << "\n";
    console() << "Brainwave - beta: " << event.getBeta() << "\n";
    console() << "Brainwave - delta: " << event.getDelta() << "\n";
    console() << "Brainwave - gamma: " << event.getGamma() << "\n";
    console() << "Brainwave - theta: " << event.getTheta() << "\n";
    console() << "---------------------------------------------\n";
}

// Create the application
CINDER_APP_BASIC( MyApp, ci::app::RendererGl )
```

If you have trained a profile for user-specific facial and cognitive input, you can import the user's *.emu file as follows:

```cpp
// Load profiles from Control Panel's data location
std::map<std::string, std::string> profiles = Emotiv::listProfiles( "c:\\ProgramData\\Emotiv" );

// Find my profile and load it onto the first device
for ( std::map<std::string, std::string>::iterator profileIt = profiles.begin(); profileIt != profiles.end(); ++profileIt ) {
    if ( profileIt->first == "steve" ) {
        mEmotiv->loadProfile( profileIt->second, 0 );
    }
}
```

A few other improvements:

- Code re-organized for better portability
- Now includes sample projects
- Project for building static library included
- Upgraded to Emotiv SDK 1.0.0.5
- Improved thread management for better performance
- Cleaned up the destructor -- nothing to call or clean up when your application exits
- 1.0.1: Improved brainwave analysis speed and accuracy
- 1.0.1: Improved Brainwave sample
- 1.0.2: Code cleanup

If anyone is interested in porting this over to Linux or Mac OS X, please let me know. I'd love to include it.
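As a footnote to the neurofeedback idea described earlier (reward the patient when beta outweighs theta), here is a minimal sketch of what that reward logic might look like. None of this is part of the block: `FocusReward`, its `ratio` and `holdSamples` parameters, and the idea of requiring a streak of focused readings are all my own assumptions for illustration, and it works on plain `float` values rather than on `EmotivEvent` directly.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper (not part of the Emotiv block): watches a stream of
// beta/theta readings and signals when a reward should be given.
class FocusReward
{
public:
    // ratio       - how far beta must exceed theta (1.0 = simply higher)
    // holdSamples - consecutive focused readings required per reward
    FocusReward( float ratio, std::size_t holdSamples )
        : mRatio( ratio ), mHoldSamples( holdSamples ), mStreak( 0 )
    {
        assert( holdSamples > 0 );
    }

    // Feed one reading; returns true when a reward has been earned
    bool update( float beta, float theta )
    {
        if ( beta > theta * mRatio ) {
            ++mStreak;      // still focused, extend the streak
        } else {
            mStreak = 0;    // drifted off, start over
        }

        if ( mStreak >= mHoldSamples ) {
            mStreak = 0;    // begin counting toward the next reward
            return true;
        }
        return false;
    }

private:
    float       mRatio;
    std::size_t mHoldSamples;
    std::size_t mStreak;
};
```

Wired into the sample application, I'd expect the call to look something like `if ( mReward.update( event.getBeta(), event.getTheta() ) ) { /* reward the player */ }` inside `onData`, assuming those getters return values that convert to `float`. Requiring a streak rather than a single reading is a design choice to keep one noisy sample from triggering a reward; sensible values for both parameters would need tuning against real headset data.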