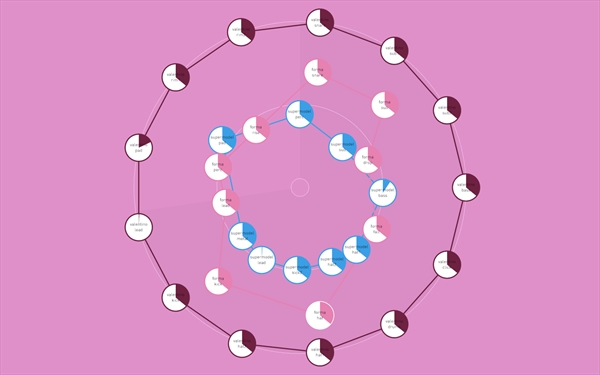

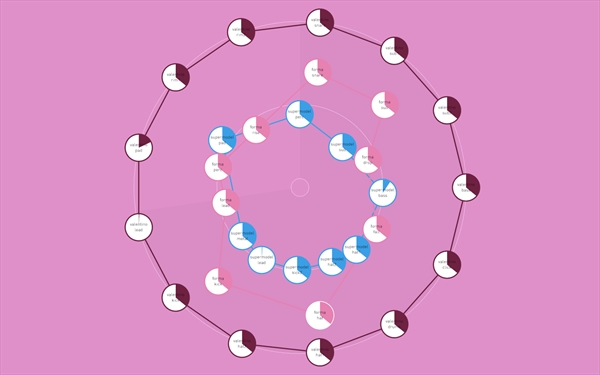

Pictured: The next generation of Radial

On December 3, 2011, I debuted my new multi-touch live PA rig at the From 0-1 Artist Showcase. This new software began as a port of Radial, a computer-vision-based music performance package I developed on openFrameworks, Magnetic, and FMOD. The version shown here is a Cinder application which uses Windows 7's native multi-touch and a capacitive screen. The accuracy, performance, and ease of setup are light years beyond Radial.

The concept is simple. There are three rings on the screen. The outside ring marks the edge of the volume range; anything outside this ring is silent. The middle ring is 100% volume. Moving a sound from the outer ring to the middle one is akin to sliding a volume fader from 0 to 10. Moving it on toward the center brings the volume back down to 0% and removes the sound. Dragging a sound from outside the outer ring to the center in a straight line therefore takes it from silent to full volume, back to silent, and then off the stage.

Performing at the From 0-1 Artist Showcase, Part I

The set data is stored in a very basic JSON file, which can be built automatically by scanning the asset directory. The software maintains a master clock, indicated by a "doppler" type visual in the rings which loops every sixteen notes. Each sound also has its own "doppler" to represent its progress.

By getting these sounds out of the traditional "grid" layout, I can crossfade elements of two or more songs, rather than cut one off to replace another as I'd have to do with other software. If there's a sound I really like and want to leave in for a while, I can leave it on stage even after I've finished performing its song. Collision detection between objects lets me replace one sound while introducing another by pushing the old sound out of the way with the new one.

Performing at the From 0-1 Artist Showcase, Part II

This approach removes the constraint of channel count limits.
I can load a track with twenty channels and not worry about being unable to perform it on an 8-channel controller. I don't have to associate clips on a computer screen with faders and buttons on a separate hardware controller, or deal with the latency intrinsic to such a setup. A single class receives touch data, renders the channel information to the screen, and plays the audio. There is no latency beyond the slight lag of the camera driver, so there is a feeling of real connection to the audio.
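
The three-ring volume behavior described earlier can be sketched as a function of a sound's distance from the center of the stage. This is only an illustration, not the application's actual code: the function names, the ring radii, and the choice of linear ramps between rings are all assumptions, and it is written in Python for brevity rather than in the C++ a Cinder app would use.

```python
def ring_volume(distance, inner, middle, outer):
    """Map distance from the stage center to a volume in [0.0, 1.0].

    inner, middle, and outer are hypothetical ring radii with
    inner < middle < outer. Linear ramps are an assumption.
    """
    if distance >= outer:
        # Outside the outer ring: silent.
        return 0.0
    if distance >= middle:
        # Outer ring to middle ring: fade up from 0 to full volume.
        return (outer - distance) / (outer - middle)
    if distance > inner:
        # Middle ring to center ring: fade back down to 0.
        return (distance - inner) / (middle - inner)
    # At or inside the center ring: silent.
    return 0.0


def should_remove(distance, inner):
    """A sound dragged into the center ring is taken off the stage."""
    return distance <= inner
```

With assumed radii of 50, 150, and 250 pixels, a sound halfway between the outer and middle rings (distance 200) plays at half volume, one on the middle ring (distance 150) plays at full volume, and one dragged to a distance of 40 is silent and flagged for removal.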